Introduction

As artificial intelligence (AI) continues to advance, there is a growing need for explainability: the ability to understand and interpret how AI models arrive at their decisions. This is especially important in high-stakes domains such as healthcare, finance, and the justice system, where incorrect or biased decisions can have serious consequences.

There are at least four distinct groups of people who want explanations from an AI system, each with different motivations:

Group 1: End User Decision Makers

These are the people who use an AI system’s recommendations to make decisions, such as physicians, loan officers, managers, judges, and social workers. They want explanations that build their trust and confidence in the system’s recommendations.

Group 2: Affected Users

These are the people affected by an AI system’s recommendations, such as patients, loan applicants, employees, arrested individuals, and at-risk children. They want explanations that help them understand whether they were treated fairly and which factor(s) could be changed to get a different result.

Group 3: Regulatory Bodies

Government agencies, charged with protecting the rights of their citizens, want to ensure that decisions are made safely and fairly and that society is not negatively affected by them.

Group 4: AI System Builders

Technical individuals (data scientists and developers) who build or deploy an AI system want to know whether it is working as expected, how to diagnose and improve it, and possibly what insight can be gained from its decisions.

Enter human in the loop (HITL), an approach in which humans are involved in reviewing and interpreting the output of AI models. HITL can improve the transparency and accountability of AI systems and, ultimately, build trust with end users.

But how exactly does HITL for explainability work in practice? Let's take a closer look.

Providing an Interactive Interface to Deep Learning Models at Inference Time

Once a model has been built with certain data to solve a specific task, the specifications keep changing, both over time for the same user and from one user to another. Instead of rebuilding the model for every new specification, this approach exposes controllable parameters that users can configure to control the model's behavior. Users can then steer the model's decisions based on considerations such as domain knowledge, experience, the situation at hand, and the range of possible outcomes.

Procedure -

1. Identify possible hooks for the given problem.

2. Prepare a dataset annotated with hooks and explanations.

3. Train the model.
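Steps 1 and 2 can be pictured as attaching hook labels and a short explanation to every training example, so that step 3 has a target for the hook network as well as for the main task. The sketch below is a minimal illustration under our own assumptions: the `HookedExample` record, its field names, the `hook_1`..`hook_3` names, and the file names are hypothetical, not a format defined by the referenced framework.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical hook vocabulary chosen in step 1 (problem-specific).
HOOK_NAMES: List[str] = ["hook_1", "hook_2", "hook_3"]


@dataclass
class HookedExample:
    """One training record: input, task label, hook labels, explanation."""
    input_path: str                                        # assumed input: an image file
    label: int                                             # task target, e.g. 1 = masked
    hooks: Dict[str, int] = field(default_factory=dict)    # hook name -> 0/1
    explanation: str = ""                                  # annotator's free-text rationale

    def validate(self) -> None:
        # Every hook in the vocabulary must be annotated, and only with 0 or 1.
        missing = [h for h in HOOK_NAMES if h not in self.hooks]
        if missing:
            raise ValueError(f"missing hook labels: {missing}")
        bad = {h: v for h, v in self.hooks.items() if v not in (0, 1)}
        if bad:
            raise ValueError(f"hook labels must be 0/1: {bad}")


def hook_vector(example: HookedExample) -> List[int]:
    """Fixed-order 0/1 vector used as the hook-network target in step 3."""
    example.validate()
    return [example.hooks[h] for h in HOOK_NAMES]


dataset = [
    HookedExample("img_001.png", 1, {"hook_1": 1, "hook_2": 0, "hook_3": 0},
                  "space visible in mask, digits unreadable"),
    HookedExample("img_002.png", 1, {"hook_1": 0, "hook_2": 0, "hook_3": 1},
                  "first 8 digits masked by hand"),
]
targets = [hook_vector(ex) for ex in dataset]
```

Validating at preparation time keeps incompletely annotated records out of training, where a missing hook label would otherwise be silently read as "hook absent".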

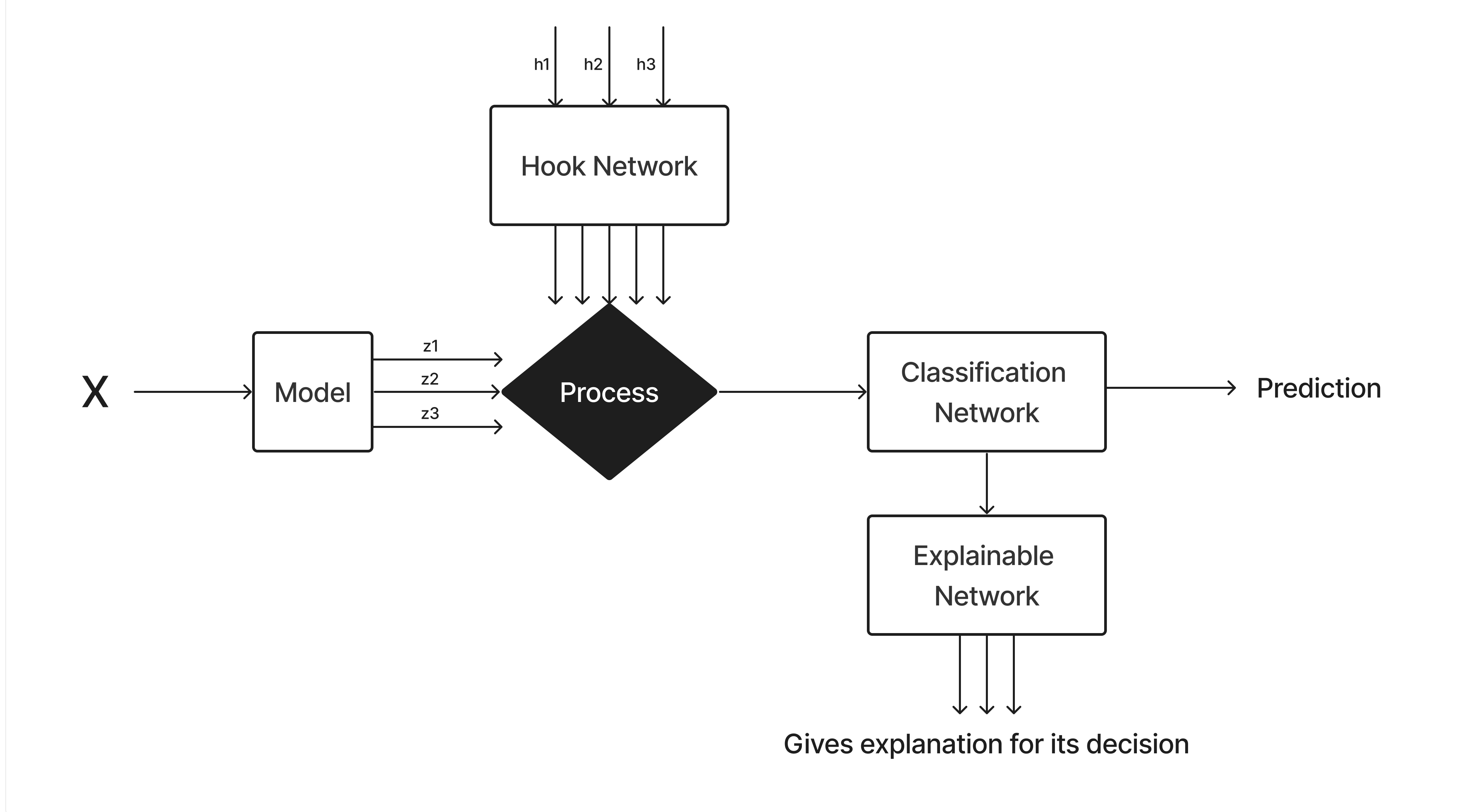

Framework -

In this framework, latent discrete variables (hooks) are provided to users as controllable parameters. During training, the hook network learns the relationship between the given hooks and the latent space produced by the prediction network. The classification network is trained end to end on the representations from both the prediction network and the hook network. An explainable network attached to the classification network produces explanations in terms of the latent discrete variables.

Reference: https://arxiv.org/pdf/1907.10739.pdf
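A minimal forward-pass sketch of how the components described above connect, written in plain Python so it runs without a deep learning library. The layer sizes, the single dense layer per network, the tanh/sigmoid activations, and the class and function names are all assumptions made for illustration. The `explain` method is a simple threshold over hook probabilities standing in for the explainable network, not the network from the reference, and end-to-end training (jointly fitting the networks, presumably with a task loss plus a hook-prediction loss) is not shown.

```python
import math
import random
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def init_layer(n_in: int, n_out: int, rng: random.Random) -> Tuple[Matrix, Vector]:
    """Small random weights and zero biases for a dense layer."""
    w = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return w, [0.0] * n_out


def dense(x: Sequence[float], layer: Tuple[Matrix, Vector]) -> Vector:
    """Affine map W x + b."""
    w, b = layer
    return [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


class HookedClassifier:
    """Forward pass only: prediction -> hook -> classification -> explanation."""

    def __init__(self, n_features: int, n_latent: int, hook_names: List[str], seed: int = 0):
        rng = random.Random(seed)
        self.hook_names = hook_names
        self.prediction = init_layer(n_features, n_latent, rng)         # prediction network
        self.hook = init_layer(n_latent, len(hook_names), rng)          # hook network
        self.classify = init_layer(n_latent + len(hook_names), 1, rng)  # classification network

    def forward(self, x: Sequence[float]) -> Tuple[float, Vector]:
        z = [math.tanh(v) for v in dense(x, self.prediction)]  # latent space
        hooks = [sigmoid(v) for v in dense(z, self.hook)]       # one probability per hook
        logit = dense(z + hooks, self.classify)[0]              # sees latent + hooks
        return sigmoid(logit), hooks

    def explain(self, hooks: Vector, threshold: float = 0.5) -> List[str]:
        """Stand-in for the explainable network: report the hooks that fired."""
        return [name for name, p in zip(self.hook_names, hooks) if p >= threshold]


model = HookedClassifier(n_features=4, n_latent=3, hook_names=["hook_1", "hook_2", "hook_3"])
prob, hook_probs = model.forward([0.2, -1.0, 0.5, 0.3])
print(round(prob, 3), [round(p, 3) for p in hook_probs], model.explain(hook_probs))
```

In this sketch the classifier receives the hook probabilities alongside the latent vector, which is one way to make its decision expressible in terms of the hooks.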

Example -

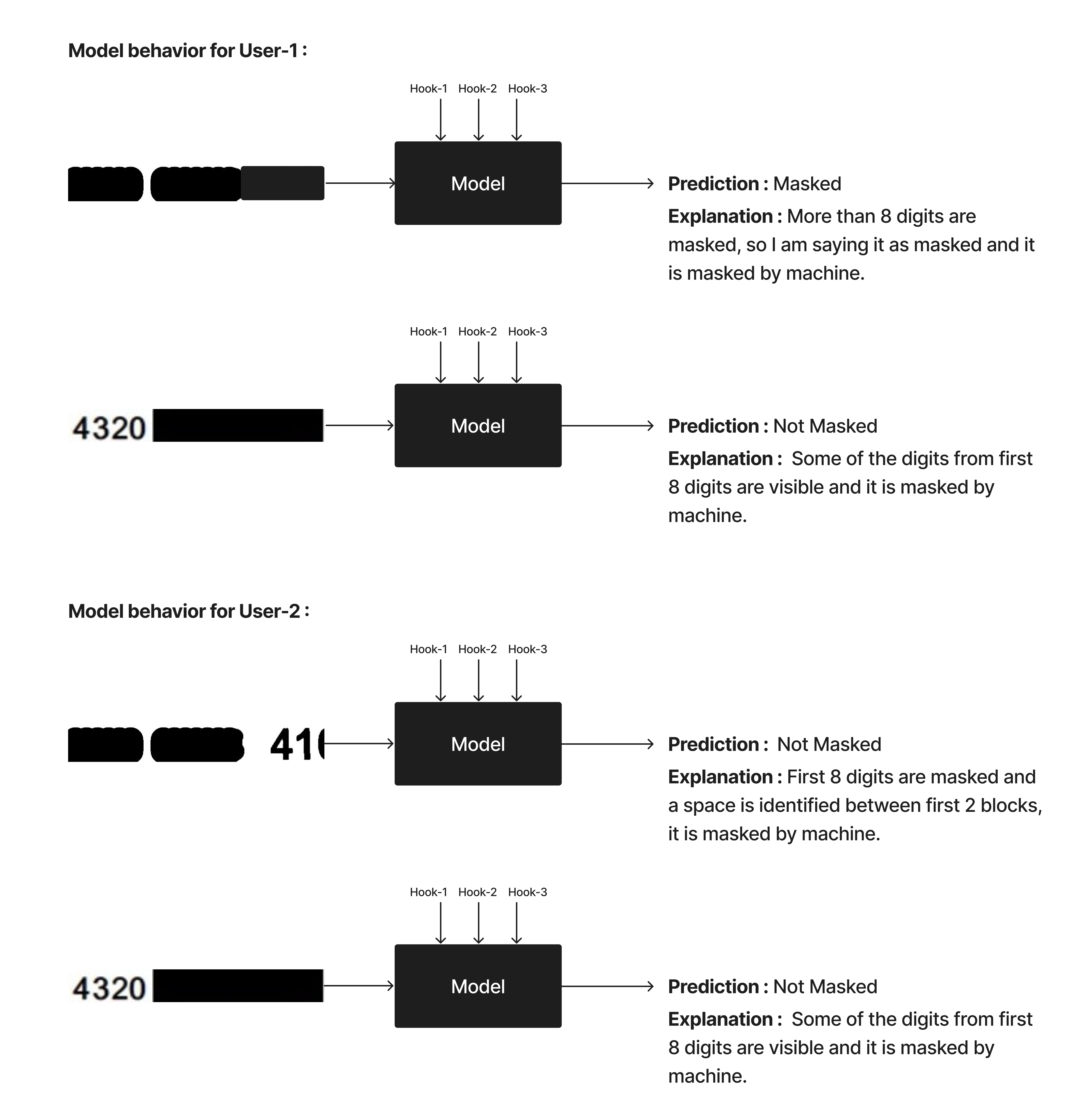

Let's take the same example of the Aadhaar redaction model (which determines whether an Aadhaar number is masked or not). Here is a list of possible hooks:

Hook-1: A space is identified while masking the first 8 digits, even though the first 8 digits themselves are not visible.

Hook-2: More than 8 digits are masked.

Hook-3: The first 8 digits were masked by a human, not by a machine.
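Because these hooks are not mutually exclusive, one natural reading is that the hook network scores each of them independently (a multi-label output, one probability per hook). The sketch below assumes that representation; the `AadhaarHook` enum, the `hook_1`..`hook_3` keys, and the 0.5 threshold are illustrative choices, not part of the original model.

```python
from enum import Enum
from typing import Dict, List


class AadhaarHook(Enum):
    """Hypothetical identifiers for the three hooks listed above."""
    SPACE_IN_MASK = "hook_1"   # space visible inside the mask over the first 8 digits
    OVER_MASKED = "hook_2"     # more than 8 digits masked
    HUMAN_MASKED = "hook_3"    # first 8 digits masked by a human, not a machine


def detected_hooks(hook_probs: Dict[str, float], threshold: float = 0.5) -> List[AadhaarHook]:
    """Turn per-hook probabilities into the list of hooks that fired.

    Each hook is thresholded on its own (no argmax), so several can fire at once.
    Hooks missing from `hook_probs` are treated as probability 0.
    """
    return [hook for hook in AadhaarHook if hook_probs.get(hook.value, 0.0) >= threshold]


# Example: the model is fairly sure the digits were masked by hand.
probs = {"hook_1": 0.12, "hook_2": 0.08, "hook_3": 0.91}
print([h.name for h in detected_hooks(probs)])  # ['HUMAN_MASKED']
```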

Configuration template provided to users:

Hook-1:

If a space is identified while masking the first 8 digits, even though the first 8 digits are not visible.

User-1 perspective: Even though a space is visible, the first 8 digits are not, so I can consider the number masked; this does not violate company rules.

User-2 perspective: If any space is visible within the first 8 digits, there is a chance that at least one digit is slightly visible. I don’t want to take that risk, so I want my people to verify such cases clearly.

Hook-2:

If more than the first 8 digits are masked.

User-1 perspective: As long as the first 8 digits are not visible, I can consider the number masked.

User-2 perspective: If the last 4 digits are not visible, we cannot add the record to the database; these cases need a different procedure and cannot be passed through the application.

Hook-3:

If the first 8 digits were masked by a human, not a machine.

User-1 perspective: If the first 8 digits are not visible, it makes no difference to us whether a human or a machine masked them.

User-2 perspective: If the number was masked by a human, we cannot take the risk; it must be verified manually.

The users then configure these parameters for the model deployed in production.
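The configuration template can be read as a per-user policy that maps each detected hook to an action, applied on top of the model's output at inference time without retraining. A minimal sketch, assuming three hypothetical actions (`ACCEPT_AS_MASKED`, `MANUAL_REVIEW`, `SEPARATE_PROCEDURE`) and a precedence rule where the strictest action among the fired hooks wins; the two policies encode the User-1 and User-2 perspectives from the template, but the action names, the precedence rule, and the treatment of unknown hooks are our assumptions, not part of the original model.

```python
from enum import IntEnum
from typing import Dict, Iterable


class Action(IntEnum):
    """Hypothetical actions; a higher value means a stricter outcome."""
    ACCEPT_AS_MASKED = 0
    MANUAL_REVIEW = 1
    SEPARATE_PROCEDURE = 2


# Per-user configuration: hook id -> action to take when that hook fires.
USER_1: Dict[str, Action] = {
    "hook_1": Action.ACCEPT_AS_MASKED,    # space visible but digits hidden: acceptable
    "hook_2": Action.ACCEPT_AS_MASKED,    # only the first 8 digits matter
    "hook_3": Action.ACCEPT_AS_MASKED,    # human vs machine masking makes no difference
}
USER_2: Dict[str, Action] = {
    "hook_1": Action.MANUAL_REVIEW,       # a digit might be slightly visible
    "hook_2": Action.SEPARATE_PROCEDURE,  # last 4 digits missing: cannot go to the db
    "hook_3": Action.MANUAL_REVIEW,       # human masking must be verified manually
}


def decide(masked_prob: float, fired_hooks: Iterable[str],
           config: Dict[str, Action], threshold: float = 0.5) -> str:
    """Combine the model's prediction with one user's hook configuration."""
    if masked_prob < threshold:
        return "NOT_MASKED"
    # Strictest configured action among the fired hooks; hooks missing from the
    # config are treated conservatively as needing manual review (an assumption).
    actions = [config.get(h, Action.MANUAL_REVIEW) for h in fired_hooks]
    return max(actions, default=Action.ACCEPT_AS_MASKED).name


# Same model output, two different user decisions.
print(decide(0.93, ["hook_3"], USER_1))  # ACCEPT_AS_MASKED
print(decide(0.93, ["hook_3"], USER_2))  # MANUAL_REVIEW
```

Keeping the policy outside the model is what lets each user change behavior by editing a mapping rather than retraining.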

The same hook network can also be used for time series, so there is no need to build multiple models for different user specifications. These specifications may include data at different time intervals, different window sizes, and so on.
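For time series, a user's specification (sampling interval, window size) can be turned into model inputs by a shared preprocessing step rather than baked into separate models. A minimal sketch, assuming averaging over non-overlapping buckets for the interval and sliding windows for the window size; `SeriesSpec`, `resample`, and `make_windows` are hypothetical helpers. How the single model is then conditioned on the specification (for example, by supplying the window size as a hook) is not shown and would be a further assumption.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class SeriesSpec:
    """Hypothetical user specification for a time-series request."""
    interval: int     # aggregate every `interval` raw samples into one point
    window: int       # number of aggregated points per model input
    stride: int = 1   # step between the starts of consecutive windows


def resample(series: Sequence[float], interval: int) -> List[float]:
    """Average non-overlapping buckets of `interval` samples (drops a partial tail)."""
    n = len(series) // interval
    return [sum(series[i * interval:(i + 1) * interval]) / interval for i in range(n)]


def make_windows(series: Sequence[float], spec: SeriesSpec) -> List[List[float]]:
    """Turn a raw series into the windows one shared model would score."""
    points = resample(series, spec.interval)
    return [points[i:i + spec.window]
            for i in range(0, len(points) - spec.window + 1, spec.stride)]


raw = [float(v) for v in range(12)]                        # 12 raw samples: 0.0 .. 11.0
fine = make_windows(raw, SeriesSpec(interval=1, window=4, stride=4))
coarse = make_windows(raw, SeriesSpec(interval=3, window=2))
print(fine)    # [[0.0, 1.0, 2.0, 3.0], [4.0, 5.0, 6.0, 7.0], [8.0, 9.0, 10.0, 11.0]]
print(coarse)  # [[1.0, 4.0], [4.0, 7.0], [7.0, 10.0]]
```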

Conclusion

In conclusion, human in the loop is an essential component of achieving explainability in AI. By involving humans in interpreting the output of AI models, businesses can improve the transparency, accountability, and performance of their systems. This can ultimately lead to greater trust in and adoption of AI technologies, and help businesses stay ahead in an increasingly competitive marketplace.
